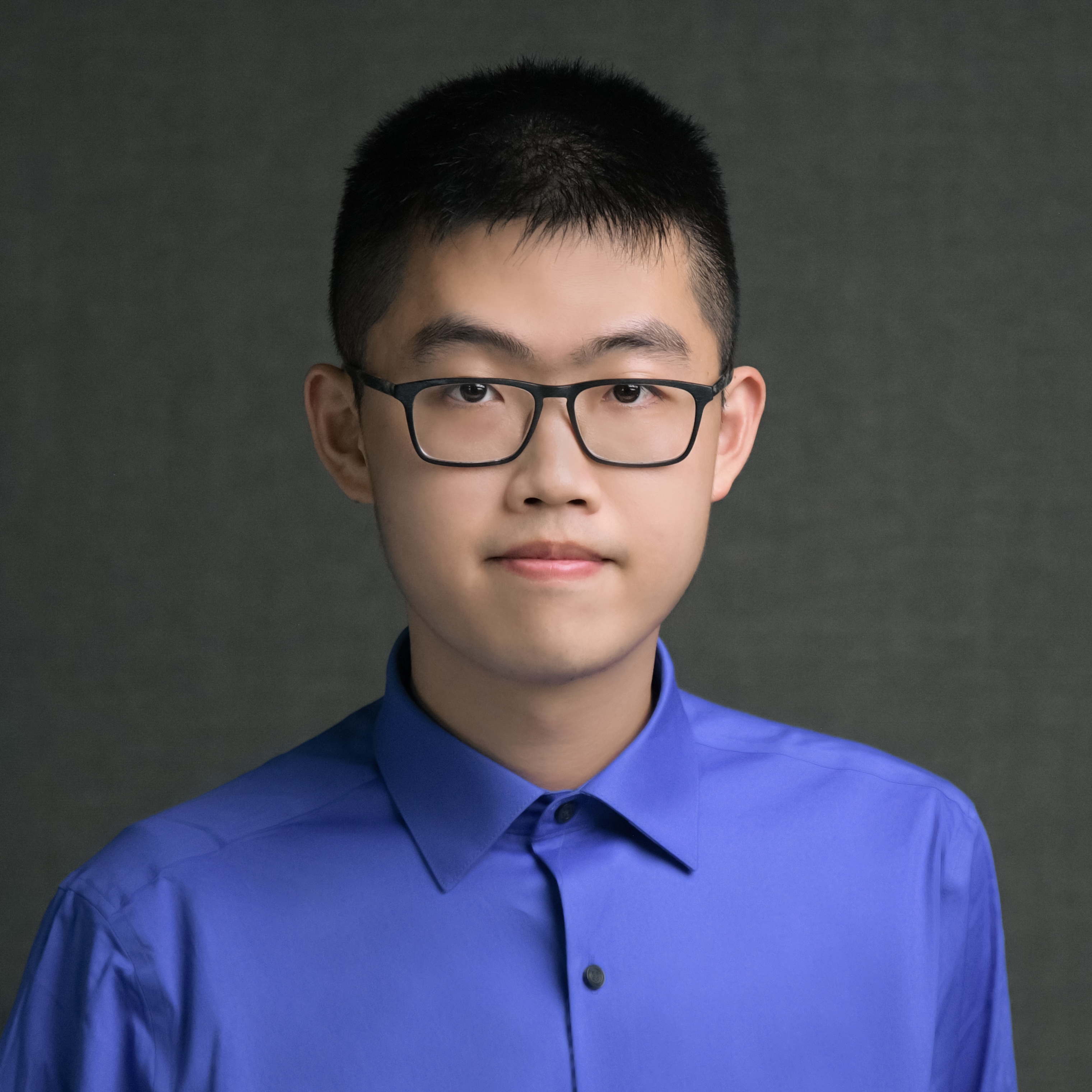

Xinya Du

- Assistant Professor of Computer Science

- University of Texas at Dallas

- Email: xinya.du@utdallas.edu / xinyadu8@gmail.com

- Office: ECSS 3.227

I am Xinya Du, a tenure-track assistant professor in the Department of Computer Science at UT Dallas. My research focuses on Natural Language Processing (NLP), Large Language and Vision-Language Models (LLMs/VLMs), and Machine Learning (ML). I earned my Ph.D. in Computer Science from Cornell University. Afterward, I was a Postdoctoral Research Associate at the University of Illinois at Urbana-Champaign.

My work has been published in leading NLP and ML conferences, covered by major media, and included in the list of Most Influential ACL Papers. I was named a Spotlight Rising Star in Data Science. I received the Amazon Research Award, the Cisco Research Award, and the 2024 NSF CAREER Award.

Publications | Advising | Teaching & Service | Curriculum Vitae

Recent

- Nov 2025 Attending NeurIPS (Dec 3-6); shoot me an email for a coffee chat!

- Aug 2025 Excited to receive a grant on Control Evaluations for AI Debate from Open Philanthropy!

- Aug 2025 Four papers accepted by EMNLP 2025.

- Aug 2025 Our work on efficient LLM reasoning and on LLMs reproducing LLM research code is out.

- Mar 2025 We are holding The First Workshop on Multimodal Knowledge and Reasoning at IJCAI 2025.

- Feb 2025 Our paper "Learning to Generate Research Idea" won the Best Paper Award at AAAI AI4Research 2025 🏆.

- Sep 2024 Two papers accepted by EMNLP 2024 and two papers accepted by NeurIPS 2024.

- Aug 2024 Our preprint on an LLM research coding agent is out.

- Jan 2024 Received NSF CAREER Award.

- Jan 2024 Received Amazon Research Award.

- Nov 2023 FaithScore for evaluating LVLM hallucinations.

- Oct 2023 Three papers accepted to the EMNLP 2023 main conference and Findings.

- June 2023 Our preprint on LLM peer evaluation is out.

- May 2023 Released a post about LLM licensing and restrictions.

- Feb 2023 Joining as a panelist on "ChatGPT: Fact vs Fiction", hosted by UTD and The Dallas Morning News.

- Feb 2023 Developed a new course: CS6301 Special Topics in Computer Science: Deep Learning for NLP.

- Jan 2023 Serving as an Area Chair (IE) for ACL 2023.

- July 2022 Attending NAACL 2022 in Seattle, WA.

- May 2022 Attending ACL 2022 virtually.

- ...

Research Interests (Publications)

I enjoy exploring and building things that are novel and impactful, in research and in life. My current research focuses on developing intelligent agents that can acquire, discover, and self-improve from new knowledge, while being trustworthy, explainable, and aligned with human values.

- Knowledge and Reasoning: I focus on enabling NLP systems and large language models (LLMs) to conduct faithful and explainable reasoning across modalities. This includes leveraging contextual and external knowledge in end-to-end models to improve faithfulness and factuality, using models to induce new rules and hypotheses, and understanding the reasoning capabilities of LLMs. We also design fine-grained methods for evaluating hallucinations in models' knowledge reasoning processes (e.g., FaithScore).

- Alignment, Safety, and Scalable Oversight: I design both alignment methodologies and evaluations to ensure LLMs behave safely and transparently. This includes developing scalable oversight and open-ended evaluations (e.g., PRD and IQA-Eval) to assess safety and robustness. I also create mechanisms for automatic and scalable feedback collection (e.g., FG-PRM, FGAIF) that enable models to self-correct and align with human values. Our work has had real-world impact, for example in the Nova AI Safety Challenge.

- LLM and Science (where "Science" includes general, biomedical, and other domains) / Computer Vision / Human–Computer Interaction: I develop LLM-based frameworks for generating scientific hypotheses and for implementing and executing scientific experiments autonomously. I focus on aligning LLMs with human values and on enabling models (or humans) to understand community policies and behave accordingly.